Ecovative is a company at the forefront of material innovation, reimagining our world with products that are "grown" rather than "machined". By opening these materials up to designers and architects, Ecovative is helping drive an ecological revolution in which the harmful, toxic, and wasteful industrial processes of the 20th century are being replaced by green, virtually waste-free systems. This may be the key to the future of sustainable design and construction.

Ecovative embodies its mission to "rid the world of toxic, unsustainable materials" by promoting the Grow It Yourself (GIY) movement. Just as the DIY movement revolutionized open-source physical computing and hardware, the GIY movement is opening up biology in the same way and has even helped spawn a biohacking subculture. Ecovative sells mushroom material kits that allow anyone to experiment with growing their own products.

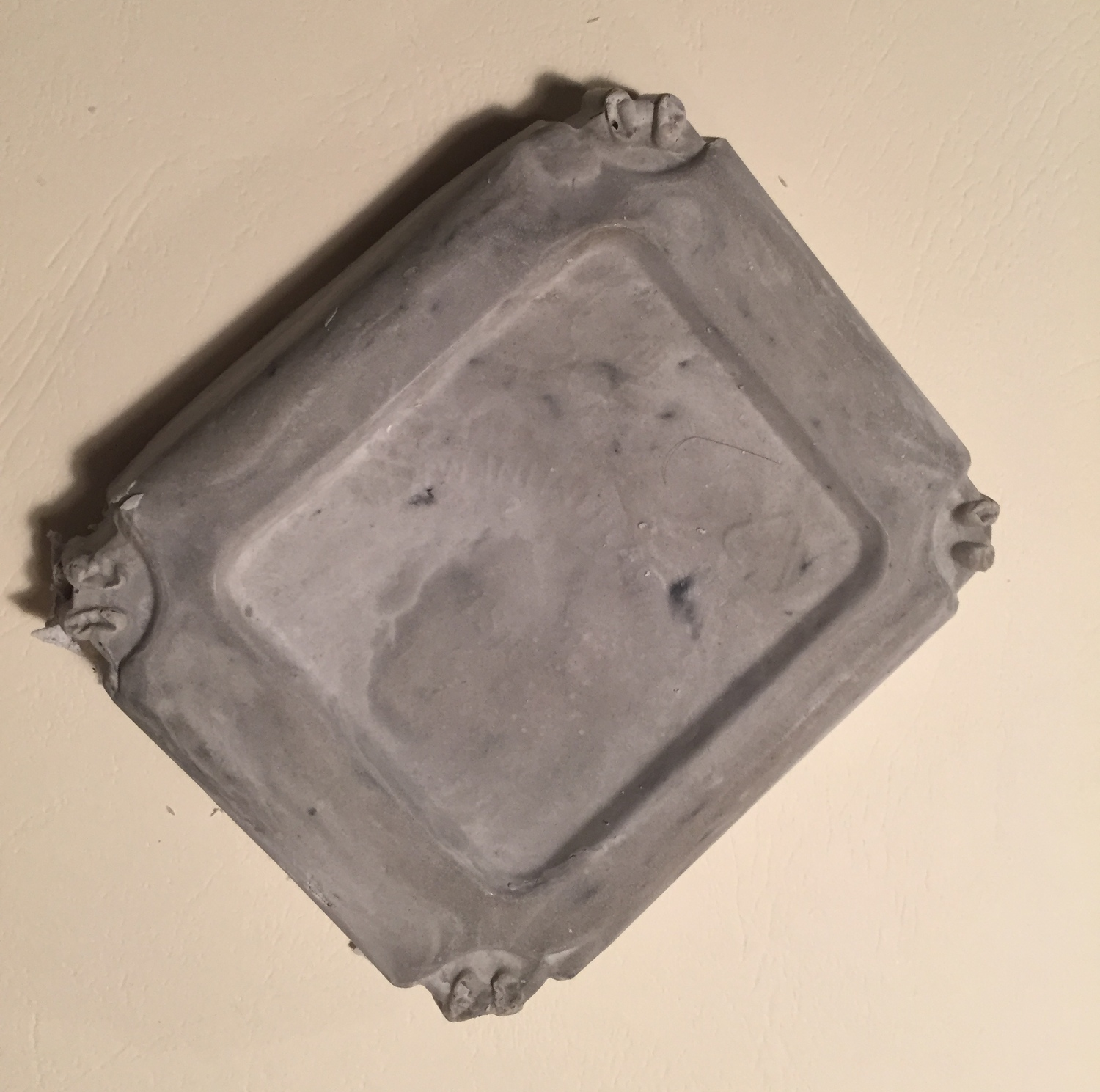

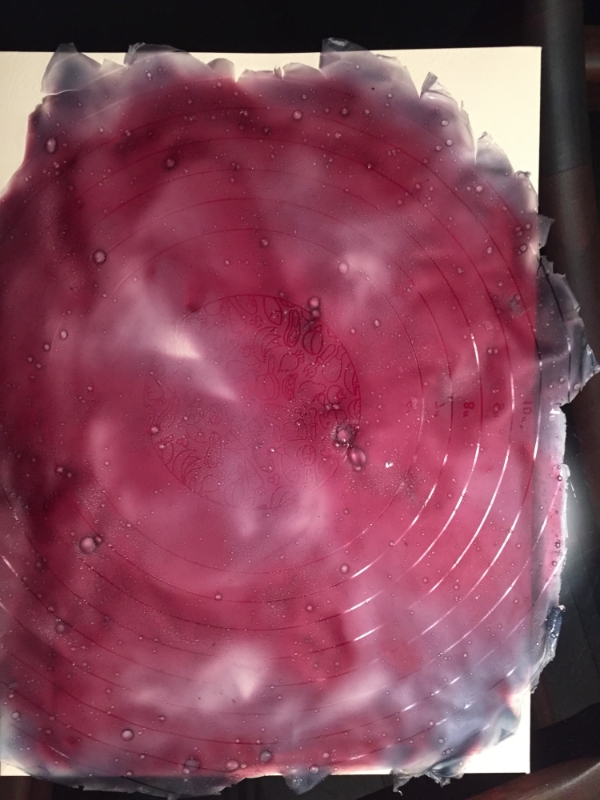

Using the Ecovative GIY kit, I made a number of prototypes as a proof of concept that anyone can grow their own materials with virtually no pollution or waste, and at a fraction of the cost of unsustainable materials such as plastics.

Here are some photos of my process: